Regression

MATH 4720/MSSC 5720 Introduction to Statistics

Department of Mathematical and Statistical Sciences

Marquette University

Regression

What is Regression

Regression models the relationship between one or more numerical/categorical response variables \((Y)\) and one or more numerical/categorical explanatory variables \((X)\).

A regression function \(f(X)\) describes how a response variable \(Y\), on average, changes as an explanatory variable \(X\) changes.

Examples:

college GPA \((Y)\) vs. ACT/SAT score \((X)\)

sales \((Y)\) vs. advertising expenditure \((X)\)

crime rate \((Y)\) vs. median income level \((X)\)

Unknown Regression Function

The true relationship between \(X\) and the mean of \(Y\), the regression function \(f(X)\), is unknown.

The collected data \((x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)\) is all we know and have.

Goal: estimate \(f(X)\) from our data and use it to predict value of \(Y\) given any value of \(X\).

Simple Linear Regression

- Start with simple linear regression:

- Only one predictor \(X\) (known and constant) and one response variable \(Y\)

- the regression function used for predicting \(Y\) is a linear function.

- use a regression line in a X-Y plane to predict the value of \(Y\) for a given value of \(X = x\).

Math review: A linear function \(y = f(x) = \beta_0 + \beta_1 x\) represents a straight line

\(\beta_1\): slope, the amount by which \(y\) changes when \(x\) increases by one unit.

\(\beta_0\): intercept, the value of \(y\) when \(x = 0\).

The linearity assumption: \(\beta_1\) does not change as \(x\) changes.

Sample Data: Relationship Between X and Y

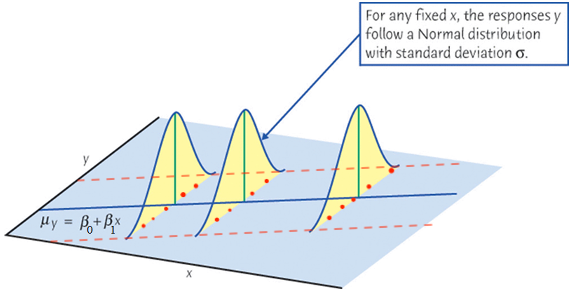

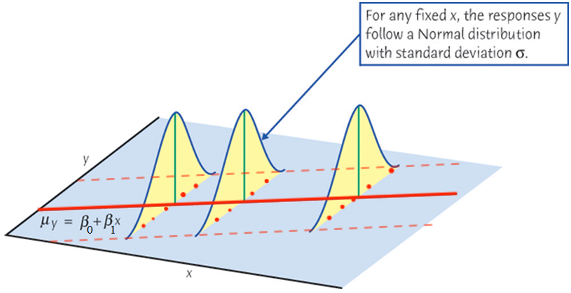

Real data \((x_i, y_i), i = 1, 2, \dots, n\) do not form a perfect straight line!

\(y_i = \beta_0+\beta_1x_i + \color{red}{\epsilon_i}\)

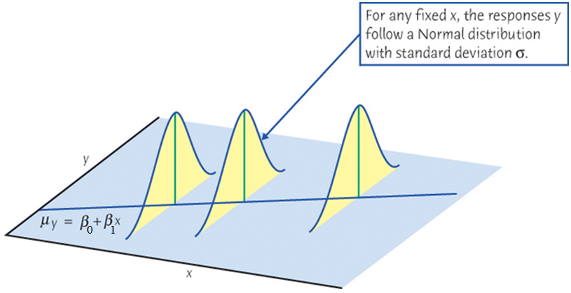

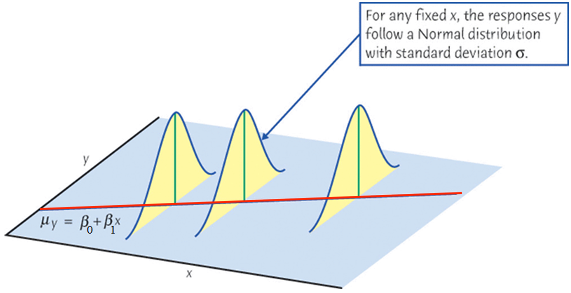

When we collect our data, at any given level of \(X = x\), \(y\) is assumed being drawn from a normal distribution (for inference purpose).

Its value varies around and will not be exactly equal to its mean \(\mu_y\).

When we collect our data, at any given level of \(X = x\), \(y\) is assumed being drawn from a normal distribution (for inference purpose).

Its value varies around and will not be exactly equal to its mean \(\mu_y\).

- The mean of \(Y\) and \(X\) form a straight line.

Simple Linear Regression Model (Population)

For the \(i\)-th measurement in the target population,

\[Y_i = \beta_0 + \beta_1X_i + \epsilon_i\]

\(Y_i\): the \(i\)-th value of the response (random) variable.

\(X_i\): the \(i\)-th known fixed value of the predictor.

\(\epsilon_i\): the \(i\)-th random error with assumption \(\epsilon_i \stackrel{iid}{\sim} N(0, \sigma^2)\).

\(\beta_0\) and \(\beta_1\) are model coefficients.

\(\beta_0\), \(\beta_1\) and \(\sigma^2\) are fixed unknown parameters to be estimated from the sample data once we collect them.

Important Features of Model \(Y_i = \beta_0 + \beta_1X_i + \epsilon_i\)

\(\epsilon_i \stackrel{iid}{\sim} N(0, \sigma^2)\)

\[\begin{align*} \mu_{Y_i \mid X_i} = \beta_0 + \beta_1X_i \end{align*}\]

The mean response \(\mu_{Y\mid X}\) has a straight-line relationship with \(X\) given by a population regression line \[\mu_{Y\mid X} = \beta_0 + \beta_1X\]

Important Features of Model \(Y_i = \beta_0 + \beta_1X_i + \epsilon_i\)

\(\epsilon_i \stackrel{iid}{\sim} N(0, \sigma^2)\) \[\begin{align*} \mathrm{Var}(Y_i \mid X_i) &= \mathrm{Var}(\epsilon_i) = \sigma^2 \end{align*}\] The variance of \(Y\) does not depend on \(X\).

Important Features of Model \(Y_i = \beta_0 + \beta_1X_i + \epsilon_i\)

\(\epsilon_i \stackrel{iid}{\sim} N(0, \sigma^2)\) \[\begin{align*} Y_i \mid X_i \stackrel{indep}{\sim} N(\beta_0 + \beta_1X_i, \sigma^2) \end{align*}\] For any fixed value of \(X_i = x_i\), the response \(Y_i\) varies with \(N(\mu_{Y_i\mid x_i}, \sigma^2)\).

- Job: Collect data and estimate the unknown parameters \(\beta_0\), \(\beta_1\) and \(\sigma^2\)!

Idea of Fitting

- Interested in \(\beta_0\) and \(\beta_1\) in the following sample regression model: \[\begin{align*} y_i = \beta_0 + \beta_1~x_{i} + \epsilon_i, \end{align*}\]

Use sample statistics \(b_0\) and \(b_1\) computed from our sample data to estimate \(\beta_0\) and \(\beta_1\).

\(\hat{y}_{i} = b_0 + b_1~x_{i}\) is called fitted value of \(y_i\), a point estimate of the mean \(\mu_{y|x_i}\) and \(y_i\) itself.

Fitting a Regression Line \(\hat{Y} = b_0 + b_1X\)

Given the sample data \(\{ (x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)\},\)

Which sample regression line is the best?

What are the best estimators \(b_0\) and \(b_1\) for \(\beta_0\) and \(\beta_1\)?

Fitting a Regression Line \(\hat{Y} = b_0 + b_1X\)

Given the sample data \(\{ (x_1, y_1), (x_2, y_2), \dots, (x_n, y_n)\},\)

Which sample regression line is the best?

What are the best estimators \(b_0\) and \(b_1\) for \(\beta_0\) and \(\beta_1\)?

What does “best” mean? Ordinary Least Squares (OLS)

- Choose \(b_0\) and \(b_1\), or sample regression line \(b_0 + b_1x\) that minimizes the sum of squared residuals \(SS_{res}\)

\[SS_{res} = e_1^2 + e_2^2 + \dots + e_n^2 = \sum_{i = 1}^n e_i^2,\] where the residual \(e_i = y_i - \hat{y}_i = y_i - (b_0 + b_1x_i)\).

- If \(b_0\) and \(b_1\) are the best estimators,

\[\small{\begin{align} SS_{res} &= (y_1 - b_0 - b_1x_1)^2 + (y_2 - b_0 - b_1x_2)^2 + \dots + (y_n - b_0 - b_1x_n)^2\\ &= \sum_{i=1}^n(y_i - b_0 - b_1x_i)^2 \end{align}}\] that is the smallest comparing to any other \(SS_{res} = \sum_{i=1}^n(y_i - a_0 - a_1x_i)^2\) that uses another pair of values \((a_0, a_1) \ne (b_0, b_1)\).

Visualizing Residuals

Visualizing Residuals (cont.)

Visualizing Residuals (cont.)

Least Squares Estimates (LSE)

- The least squares approach choose \(b_0\) and \(b_1\) to minimize the \(SS_{res}\), i.e.,

\[(b_0, b_1) = \arg \min_{\alpha_0, \alpha_1} \sum_{i=1}^n(y_i - \alpha_0 - \alpha_1x_i)^2\]

MATH 1450/1455 …

\[\color{red}{b_0 = \overline{y} - b_1\overline{x}}\]

\[\color{red}{b_1 = \frac{\sum_{i=1}^n(x_i - \overline{x})(y_i - \overline{y})}{\sum_{i=1}^n(x_i - \overline{x})^2} = r \frac{\sqrt{S_{yy}}}{\sqrt{S_{xx}}}},\] where \(S_{xx} = \sum_{i=1}^n(x_i - \overline{x})^2\) and \(S_{yy} = \sum_{i=1}^n(y_i - \overline{y})^2\)

What can we learn from the formula of \(b_0\) and \(b_1\)?

R Lab: mpg Data

# A tibble: 234 × 11

manufacturer model displ year cyl trans drv cty hwy fl class

<chr> <chr> <dbl> <int> <int> <chr> <chr> <int> <int> <chr> <chr>

1 audi a4 1.8 1999 4 auto… f 18 29 p comp…

2 audi a4 1.8 1999 4 manu… f 21 29 p comp…

3 audi a4 2 2008 4 manu… f 20 31 p comp…

4 audi a4 2 2008 4 auto… f 21 30 p comp…

5 audi a4 2.8 1999 6 auto… f 16 26 p comp…

6 audi a4 2.8 1999 6 manu… f 18 26 p comp…

7 audi a4 3.1 2008 6 auto… f 18 27 p comp…

8 audi a4 quattro 1.8 1999 4 manu… 4 18 26 p comp…

9 audi a4 quattro 1.8 1999 4 auto… 4 16 25 p comp…

10 audi a4 quattro 2 2008 4 manu… 4 20 28 p comp…

# ℹ 224 more rows

R Lab: Highway MPG hwy vs. Displacement displ

plot(x = mpg$displ, y = mpg$hwy,

las = 1, pch = 19, col = "navy", cex = 0.5,

xlab = "Displacement (litres)", ylab = "Highway MPG",

main = "Highway MPG vs. Engine Displacement (litres)")

R Lab: Fit Simple Linear Regression

## all elements in reg_fit

names(reg_fit) [1] "coefficients" "residuals" "effects" "rank"

[5] "fitted.values" "assign" "qr" "df.residual"

[9] "xlevels" "call" "terms" "model" ## use $ to extract an element of a list

reg_fit$coefficients(Intercept) displ

35.70 -3.53 \(\widehat{hwy}_{i} = b_0 + b_1 \times displ_{i} = 35.7 - 3.5 \times displ_{i}\)

\(b_1\): For one unit (litre) increase of the displacement, we expect the highway MPG to be decreased, on average, by 3.5.

R Lab Fitted Values of \(y\)

R Lab Add a Regression Line

Estimation for \(\sigma^2\)

Think of \(\sigma^2\) as variance around the line or the mean square (prediction) error.

The estimate of \(\sigma^2\) is the mean square residual \(MS_{res}\):

\[\hat{\sigma}^2 = MS_{res} = \frac{SS_{res}}{n-2} = \frac{\sum_{i=1}^n(y_i - \hat{y}_i)^2}{n-2}\]

- \(MS_{res}\) is often shown in computer output as \(\texttt{MS(Error)}\) or \(\texttt{MS(Residual)}\).

R Lab Standard Error of Regression

(summ_reg_fit <- summary(reg_fit))

Call:

lm(formula = hwy ~ displ, data = mpg)

Residuals:

Min 1Q Median 3Q Max

-7.104 -2.165 -0.224 2.059 15.010

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 35.698 0.720 49.5 <2e-16 ***

displ -3.531 0.195 -18.1 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 3.84 on 232 degrees of freedom

Multiple R-squared: 0.587, Adjusted R-squared: 0.585

F-statistic: 329 on 1 and 232 DF, p-value: <2e-16R Lab Standard Error of Regression

# lots of fitted information saved in summary(reg_fit)!

names(summ_reg_fit) [1] "call" "terms" "residuals" "coefficients"

[5] "aliased" "sigma" "df" "r.squared"

[9] "adj.r.squared" "fstatistic" "cov.unscaled" # residual standard error (sigma_hat)

summ_reg_fit$sigma[1] 3.84[1] 3.84Confidence Interval for \(\beta_1\)

- \(\frac{b_1 - \beta_1}{\sqrt{\hat{\sigma}^2/S_{xx}}} \sim t_{n-2}\)

- \((1-\alpha)100\%\) CI for \(\beta_1\) is \(b_1 \pm t_{\alpha/2, n-2}\sqrt{\hat{\sigma}^2/S_{xx}}\)

confint(reg_fit, level = 0.95) 2.5 % 97.5 %

(Intercept) 34.28 37.12

displ -3.91 -3.15Hypothesis Testing: \(\beta_1\)

\(H_0: \beta_1 = \beta_1^0 \quad H_1: \beta_1 \ne \beta_1^0\)

Test statistic: Under \(H_0\),

\[t_{test} = \frac{b_1 - \color{red}{\beta_1^0}}{\sqrt{\frac{\hat{\sigma}^2}{S_{xx}}}} \sim t_{n-2}\]

- Reject \(H_0\) in favor of \(H_1\) if

- \(|t_{test}| > t_{\alpha/2, \, n-2}\)

- \(\text{p-value} = 2P(t_{n-2} > |t_{test}|) < \alpha\)

R Lab Testing on \(\beta_0\) and \(\beta_1\)

summ_reg_fit$coefficients Estimate Std. Error t value Pr(>|t|)

(Intercept) 35.70 0.720 49.6 2.12e-125

displ -3.53 0.195 -18.2 2.04e-46- Testing \(H_0: \beta_0 = 0\) and \(H_0: \beta_1 = 0\)

Interpretation of Testing Results

\(H_0: \beta_1 = 0 \quad H_1: \beta_1 \ne 0\)

Failing to reject \(H_0: \beta_1 = 0\) implies there is no linear relationship between \(Y\) and \(X\).

If we reject \(H_0: \beta_1 = 0\), does it mean \(X\) and \(Y\) are linearly related?

Test of Significance of Regression

-

Rejecting \(H_0: \beta_1 = 0\) could mean

- the straight-line model is adequate

- better results could be obtained with a more complicated model

Analysis of Variance (ANOVA) Approach

\(X\) - \(Y\) Relationship Explains Some Deviation

Suppose we only have data \(Y\) and have no information about \(X\) and relationship between \(X\) and \(Y\). How do we predict a value of \(Y\)?

Our best guess would be \(\overline{y}\) if the data have no pattern, i.e., \(\hat{y}_i = \overline{y}\).

Treat \(X\) and \(Y\) as uncorrelated.

The (total) deviation from the mean is \((y_i - \overline{y})\)

If \(X\) and \(Y\) are linearly related, fitting a linear regression model helps us predict the value of \(Y\) when the value of \(X\) is provided.

\(\hat{y}_i = b_0 + b_1x_i\) is closer to \(y_i\) than \(\overline{y}\).

The regression model explains some deviation of \(y\).

Partition of Deviation

Total deviation = Deviation explained by regression + Unexplained deviation

\((y_i - \overline{y}) = (\hat{y}_i - \overline{y}) + (y_i - \hat{y}_i)\)

\((19 - 9) = (13 - 9) + (19 - 13)\)

Sum of Squares (SS)

\(\sum_{i=1}^n(y_i - \overline{y})^2 = \sum_{i=1}^n(\hat{y}_i - \overline{y})^2 + \sum_{i=1}^n(y_i - \hat{y}_i)^2\)

Total SS \((SS_T)\) = Regression SS \((SS_R)\) + Residual SS \((SS_{res})\)

\(df_T = df_R + df_{res}\)

\(\color{blue}{(n-1) = 1 +(n-2)}\)

ANOVA for Testing Significance of Regression

A larger value of \(F_{test}\) indicates that regression is significant.

-

Reject \(H_0\) if

- \(F_{test} > F_{\alpha, 1, n-2}\)

- \(\text{p-value} = P(F_{1, n-2} > F_{test}) < \alpha\).

The ANOVA is designed to test \(H_0\) that all predictors have no value in predicting \(y\).

In SLR, the \(F\)-test of ANOVA gives the same result as a two-sided \(t\)-test of \(H_0: \beta_1=0\).

R Lab ANOVA Table

anova(reg_fit)Analysis of Variance Table

Response: hwy

Df Sum Sq Mean Sq F value Pr(>F)

displ 1 4848 4848 329 <2e-16 ***

Residuals 232 3414 15

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Coefficient of Determination

- The coefficient of determination \((R^2)\) is the proportion of the variation in \(y\) that is explained by the regression model:

\[R^2 = \frac{SS_R}{SS_T} =\frac{SS_T - SS_{res}}{SS_T} = 1 - \frac{SS_{res}}{SS_T}\]

\(R^2\) as the proportionate reduction of total variation associated with the use of \(X\).

(a) \(\hat{y}_i = y_i\) and \(\small SS_{res} = \sum_{i=1}^n(y_i - \hat{y}_i)^2 = 0\). (b) \(\hat{y}_i = \overline{y}\) and \(\small SS_R = \sum_{i=1}^n(\hat{y}_i - \overline{y})^2 = 0\).

R Lab \(R^2\)

Call:

lm(formula = hwy ~ displ, data = mpg)

Residuals:

Min 1Q Median 3Q Max

-7.104 -2.165 -0.224 2.059 15.010

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 35.698 0.720 49.5 <2e-16 ***

displ -3.531 0.195 -18.1 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 3.84 on 232 degrees of freedom

Multiple R-squared: 0.587, Adjusted R-squared: 0.585

F-statistic: 329 on 1 and 232 DF, p-value: <2e-16summ_reg_fit$r.squared[1] 0.587Prediction

Predicting the Mean Response

- With predictor value \(x = x_0\), we want to estimate the mean response \(\mu_{y|x_0} = \beta_0 + \beta_1 x_0\).

- The mean highway MPG \(\mu_{y|x_0}\) when displacement is \(x = x_0 = 5.5\).

- If \(x_0\) is within the range of \(x\), an unbiased point estimator for \(\mu_{y|x_0}\) is

\[\hat{\mu}_{y | x_0} = b_0 + b_1 x_0\]

- The \((1-\alpha)100\%\) CI for \(\mu_{y|x_0}\) is

\[\hat{\mu}_{y | x_0} \pm t_{\alpha/2, n-2} \hat{\sigma}\sqrt{\frac{1}{n} + \frac{(x_0 - \overline{x})^2}{S_{xx}}}\]

Does the length of the CI for \(\mu_{y|x_0}\) stay the same at any location of \(x_0\)?

## CI for the mean response

predict(reg_fit, newdata = data.frame(displ = 5.5), interval = "confidence", level = 0.95) fit lwr upr

1 16.3 15.4 17.2

Predicting New Observations

- Predict the value of a new observation \(y_0\) with \(x = x_0\).

- The highway MPG of a car \(y_0(x_0)\) when its displacement is \(x = x_0 = 5.5\).

- An unbiased point estimator for \(y_0(x_0)\) is

\[\hat{y}_0(x_0) = b_0 + b_1 x_0\]

- The \((1-\alpha)100\%\) prediction interval (PI) for \(y_0(x_0)\) is

\[\hat{y}_0 \pm t_{\alpha/2, n-2} \hat{\sigma}\sqrt{{\color{red}{1}}+ \frac{1}{n} + \frac{(x_0 - \overline{x})^2}{S_{xx}}}\]

What is the difference between CI for \(\mu_{y | x_0}\) and PI for \(y_0(x_0)\)?

- The PI is wider as it includes the uncertainty about \(b_0\), \(b_1\) as well as \(y_0\) due to error \(\epsilon\).

R Lab Prediction

## CI for the mean response

predict(reg_fit, newdata = data.frame(displ = 5.5), interval = "confidence", level = 0.95) fit lwr upr

1 16.3 15.4 17.2## PI for the new observation

predict(reg_fit, newdata = data.frame(displ = 5.5), interval = "predict", level = 0.95) fit lwr upr

1 16.3 8.67 23.9